Archive for category Powershell

Subscribing to SQL Server WMI Events

Posted by sqlscope in Powershell on September 22, 2013

As described at http://technet.microsoft.com/en-us/library/ms181893.aspx, the WMI Provider for Server Events lets you use WMI to monitor events in SQL Server. The events you can monitor are listed in sys.trigger_event_types (http://technet.microsoft.com/en-us/library/bb510452.aspx). Within SQL Server itself you can monitor these events using DDL triggers and event notifications. SQL Agent WMI alerts can also be used to react to these server events.

An alternative, much quicker way to monitor these events is through PowerShell. This short script lets you monitor UPDATE_STATISTICS events:

$computer = "yourcomputername"

$sqlevent = 'UPDATE_STATISTICS'

$query = "SELECT * FROM $sqlevent"

$filename = "c:\temp\" + $sqlevent + ".csv"

# Set up the action that should be taken when the event is raised, in this case writing to a CSV file

$action = { $event.SourceEventArgs.NewEvent | select-object -property * | export-csv $event.MessageData -NoTypeInformation -Append }

# Register for the event - if you're using a named instance replace MSSQLSERVER with the instance name

Register-WmiEvent -Namespace 'root\Microsoft\SqlServer\ServerEvents\MSSQLSERVER' -Query $query -Action $action -MessageData $filename -Computer $computer -SourceIdentifier "$sqlevent $computer"

# Check that the registration has been successful

Get-EventSubscriber

# Now run an UPDATE STATISTICS command then examine the c:\temp\UPDATE_STATISTICS.csv file

# When you’re finished unregister from the event

Get-EventSubscriber | Unregister-Event

# or use this to unregister from specific events

Unregister-Event -SourceIdentifier "$sqlevent $computer"

So just by setting five variables and making one call to Register-WmiEvent you have a monitoring solution not just for UPDATE STATISTICS but also for any of the other SQL Server events you wish to monitor.
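The namespace and the query are the only parts of the registration that change from one instance or event to another. The following is a minimal sketch of building both strings; the helper names Get-SqlEventNamespace and Get-SqlEventQuery, the instance name INST1 and the database name AdventureWorks are all illustrative assumptions rather than part of the original script, and the WHERE filter on DatabaseName assumes the chosen event exposes that property.

```powershell
# Hypothetical helpers: the names and sample values are illustrative only.

function Get-SqlEventNamespace {
    param([string]$InstanceName = 'MSSQLSERVER')
    # A default instance uses MSSQLSERVER; a named instance uses its instance name.
    "root\Microsoft\SqlServer\ServerEvents\$InstanceName"
}

function Get-SqlEventQuery {
    param(
        [Parameter(Mandatory = $true)][string]$EventName,
        [string]$DatabaseName
    )
    $query = "SELECT * FROM $EventName"
    if ($DatabaseName) {
        # Assumes the database name contains no quote characters.
        $query += " WHERE DatabaseName = '$DatabaseName'"
    }
    $query
}

$namespace = Get-SqlEventNamespace -InstanceName 'INST1'
$query     = Get-SqlEventQuery -EventName 'UPDATE_STATISTICS' -DatabaseName 'AdventureWorks'

$namespace
$query
```

The two strings can then be passed to Register-WmiEvent through its -Namespace and -Query parameters. Note that Register-WmiEvent is available in Windows PowerShell only.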

Using Powershell to obtain storage utilisation

Posted by sqlscope in Powershell on May 5, 2012

From SQL Server 2008 R2 SP1 onwards the sys.dm_os_volume_stats dynamic management function returns the storage details for disk volumes:

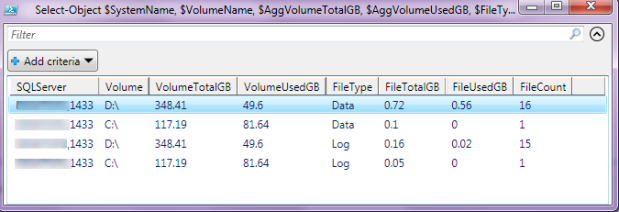

It’s possible to generate various types of storage utilisation reports using this DMF.

For prior versions we would have to query the file systems using non-SQL methods (e.g. VBScript, CLR stored procedures, extended stored procedures). We’d combine this file system data with our database usage data to get an overall storage picture. PowerShell, along with the SQL Server Management Objects (SMO), provides another way to obtain this information. This post shows that the approach is not complicated and, as a bonus, gives us a very flexible way of presenting the results. The code is as follows:

# Tested using Powershell V2

# SQLServer can be default instance, named instance or [server,port]

Param([string]$SQLServer = "YOURSERVER,1433")

$WinServer = ($SQLServer -split {$_ -eq "," -or $_ -eq "\"})[0]

# Fetch volume information

$VolumeTotalGB = @{Name="VolumeTotalGB";Expression={[Math]::Round(($_.Capacity/1GB),2)}}

$VolumeUsedGB = @{Name="VolumeUsedGB";Expression={[Math]::Round((($_.Capacity - $_.FreeSpace)/1GB),2)}}

$VolumeArray = Get-WmiObject -Computer $WinServer Win32_Volume | Where-Object {$_.FileSystem -eq "NTFS"} | `

Select-Object Name, $VolumeTotalGB, $VolumeUsedGB

# Fetch data and log file information

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.SMO") | Out-Null

$Instance = New-Object "Microsoft.SqlServer.Management.Smo.Server" $SQLServer

$FileTypeData = @{Name="FileType";Expression={"Data"}}

$FileTypeLog = @{Name="FileType";Expression={"Log"}}

$FileTotalGB = @{Name="FileTotalGB";Expression={[Math]::Round(($_.Size*1KB/1GB),2)}}

$FileUsedGB = @{Name="FileUsedGB";Expression={[Math]::Round(($_.UsedSpace*1KB/1GB),2)}}

$Volume = @{Name="Volume";Expression={}}

# Data

$FileArray = $Instance.Databases | Where-Object { $_.IsAccessible } | `

ForEach-Object { $_.FileGroups | ForEach-Object { $_.Files | Select-Object $FileTypeData, FileName, $FileTotalGB, $FileUsedGB, $Volume } }

#Log

$FileArray += $Instance.Databases | Where-Object { $_.IsAccessible } | `

ForEach-Object { $_.LogFiles | Select-Object $FileTypeLog, FileName, $FileTotalGB, $FileUsedGB, $Volume }

# Append volume information to data file information

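$FileArray | ForEach-Object {

    $FileName = $_.FileName

    # Anchor the match at the start of the path so a volume name can only match as a leading prefix

    $_.Volume = $VolumeArray | Where-Object { $FileName -Match ("^" + [regex]::Escape($_.Name)) } | Sort-Object Name -Descending | Select-Object -First 1

}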

# Sum the file size and space used by volume and file type and output the results

$SystemName = @{Name="SQLServer";Expression={$SQLServer}}

$VolumeName = @{Name="Volume";Expression={($_.Name -split ", ")[0]}}

$AggVolumeTotalGB = @{Name="VolumeTotalGB";Expression={($_.Group)[0].Volume.VolumeTotalGB}}

$AggVolumeUsedGB = @{Name="VolumeUsedGB";Expression={($_.Group)[0].Volume.VolumeUsedGB}}

$FileType = @{Name="FileType";Expression={($_.Name -split ", ")[1]}}

$AggFileTotalGB = @{Name="FileTotalGB";Expression={($_.Group | Measure-Object -Property FileTotalGB -Sum).Sum}}

$AggFileUsedGB = @{Name="FileUsedGB";Expression={($_.Group | Measure-Object -Property FileUsedGB -Sum).Sum}}

$AggFileCount = @{Name="FileCount";Expression={($_.Group | Measure-Object).Count}}

$FileArray | Group-Object -Property {$_.Volume.Name},{$_.FileType} | `

Select-Object $SystemName, $VolumeName, $AggVolumeTotalGB, $AggVolumeUsedGB, $FileType, $AggFileTotalGB, $AggFileUsedGB, $AggFileCount | Out-GridView

Note the line-continuation backticks at the ends of some lines.

There are very few actual executable lines in this script. The only parameter is SQLServer. Everything from the first backslash (for a named instance) or comma (for a port separator) onwards is stripped away to give us the Windows server name. This also works for clustered SQL Servers, where the WinServer variable takes the virtual server name. The WMI Win32_Volume class is used to retrieve the capacity and free space for each NTFS volume. This class caters for root volumes as well as mount points. We store the data in an array named VolumeArray.
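To see the server name extraction in isolation, here is a small sketch that applies the script's split expression to the three supported name forms. The server and instance names are placeholders.

```powershell
# Placeholder names covering a default instance, a named instance and a server,port pair
$names = 'SQLPROD01', 'SQLPROD01\INST1', 'SQLPROD01,1433'

$results = foreach ($SQLServer in $names) {
    # Split on any comma or backslash character and keep the leading part
    ($SQLServer -split { $_ -eq ',' -or $_ -eq '\' })[0]
}

$results
```

Each of the three inputs should reduce to the same Windows server name, which is what Get-WmiObject needs for its -Computer parameter.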

SMO is used to fetch data file and log file information (file name, size and space used). The IsAccessible property is checked to ensure that we process data for online databases only. This data is stored in an array named FileArray.

The only complicated part of the code is where we append the volume data to the file data. The idea is to create one array holding file as well as volume data. You can see that we’re aiming for the same result set shown in the screenshot for SQL Server 2012. The code achieves this by doing regex matches between the full file names and the volume names. The volume name will appear in the leading part of the full file name. If you are using mount points you’ll have two matches: one for the root volume (e.g. D:\) and another for the mount point (e.g. D:\SQLDATABASE\DATA). We obtain the mount point by doing a descending sort on the matched volume names and selecting the first one. This matched volume’s data is stored in the Volume property (a PSCustomObject) alongside all the other properties for this file.
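The mount point selection can be tried without a server by using stand-in objects. This is a sketch only: the volume names and the file name are made-up sample values, shaped like the Name values Win32_Volume returns (with a trailing backslash).

```powershell
# Sample volume names (illustrative values, not read from WMI)
$VolumeArray = 'C:\', 'D:\', 'D:\SQLDATABASE\DATA\' | ForEach-Object {
    New-Object PSObject -Property @{ Name = $_ }
}

$FileName = 'D:\SQLDATABASE\DATA\mydb.mdf'

# Both D:\ and the mount point match the file name. The descending sort
# puts the longer, more specific mount point ahead of the root volume.
$Match = $VolumeArray |
    Where-Object { $FileName -match [regex]::Escape($_.Name) } |
    Sort-Object Name -Descending |
    Select-Object -First 1

$Match.Name
```

With these sample values the selected volume should be the mount point rather than the D:\ root, and C:\ should not match at all.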

You could finish at this point and simply output FileArray to the screen, CSV, XML or HTML. The code listing goes a little further and does the equivalent of a SQL Server SUM(FileSize), SUM(FileUsedSpace) GROUP BY VolumeName, FileType (FileType is either Data or Log). Group-Object and Measure-Object are very useful aggregation cmdlets. I’m using them here to return just one of many possible result sets, displayed with Out-GridView.

The script analyses database and log files only. Backup space utilisation could be analysed using a similar approach.
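The GROUP BY style aggregation described above can be sketched on its own with a few sample rows. The objects and sizes below are invented for illustration and use a plain Volume string property rather than the nested volume object of the full script.

```powershell
# Sample file rows (illustrative values only)
$FileArray = @(
    New-Object PSObject -Property @{ Volume = 'D:\'; FileType = 'Data'; FileTotalGB = 10.5 }
    New-Object PSObject -Property @{ Volume = 'D:\'; FileType = 'Data'; FileTotalGB = 4.5 }
    New-Object PSObject -Property @{ Volume = 'D:\'; FileType = 'Log';  FileTotalGB = 2 }
    New-Object PSObject -Property @{ Volume = 'E:\'; FileType = 'Data'; FileTotalGB = 7 }
)

# Grouping on two properties produces a group Name of the form "value1, value2",
# so each part is recovered by splitting on ", "
$VolumeName     = @{Name='Volume';      Expression={ ($_.Name -split ', ')[0] }}
$FileType       = @{Name='FileType';    Expression={ ($_.Name -split ', ')[1] }}
$AggFileTotalGB = @{Name='FileTotalGB'; Expression={ ($_.Group | Measure-Object -Property FileTotalGB -Sum).Sum }}
$AggFileCount   = @{Name='FileCount';   Expression={ ($_.Group | Measure-Object).Count }}

$Summary = $FileArray | Group-Object -Property Volume, FileType |
    Select-Object $VolumeName, $FileType, $AggFileTotalGB, $AggFileCount

$Summary | Format-Table -AutoSize
```

Because the result is an ordinary collection of objects, the same $Summary could be sent to Export-Csv, ConvertTo-Html or Out-GridView instead of Format-Table.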

Using Powershell to read trace files

Posted by sqlscope in Powershell on April 19, 2012

We know that trace files can be opened in Profiler and examined manually. They can also be imported into SQL Server database tables using fn_trace_gettable and then queried. The problem with these approaches is that they are time-consuming and, for fn_trace_gettable, require database storage before queries can be run.

An alternative is to use Powershell. Credit for this approach goes to the authors of this post:

http://www.solidq.com/sqj/Documents/2010_December_Issue/sqj_006_pag._33-38.pdf

As that article mentions, the trace objects are not supported on 64-bit platforms. To avoid run-time errors, run the commands or script in a PowerShell (x86) command line shell.
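One way to catch this early is to check the bitness of the current process before loading the trace assembly. This is a small sketch, not part of the original script; the variable name and the warning text are my own.

```powershell
# [IntPtr]::Size is 4 in a 32-bit process and 8 in a 64-bit process
$Is32Bit = ([IntPtr]::Size -eq 4)

if (-not $Is32Bit) {
    Write-Warning 'This is not a 32-bit process. Run the script from the PowerShell (x86) shell.'
}
```

A script could stop at this point instead of warning, which avoids a less obvious failure later when the trace file reader is created.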

Here is an example of using Powershell to count the number of occurrences of a SQL statement within a set of trace files. The count is returned in terms of number of occurrences per minute. A possible use-case would be where you have a very frequently running SQL statement and you wish to trend its execution:

# Credit: http://www.solidq.com/sqj/Documents/2010_December_Issue/sqj_006_pag._33-38.pdf

[System.Reflection.Assembly]::LoadWithPartialName("Microsoft.SqlServer.ConnectionInfoExtended") | Out-Null;

$InputTraceDirectory = "D:\traces-in";

$TraceFilePattern = "*.trc";

# Hash to store occurrences per minute

$Occurrences = @{};

Get-ChildItem $InputTraceDirectory -Filter $TraceFilePattern | ForEach-Object {

    $InputFilename = $_.FullName;

    Write-Host ("Processing file " + $InputFilename);

    $InputTraceFile = New-Object "Microsoft.SqlServer.Management.Trace.Tracefile";

    $InputTraceFile.InitializeAsReader($InputFilename);

    While ($InputTraceFile.Read()) {

        If ($InputTraceFile.GetValue($InputTraceFile.GetOrdinal("EventClass")) -eq "SQL:BatchCompleted") {

            If ($InputTraceFile.GetValue($InputTraceFile.GetOrdinal("TextData")) -match "select columns from dbo.mytable") {

                $EndTime = $InputTraceFile.GetValue($InputTraceFile.GetOrdinal("EndTime"));

                # Date doesn't matter. Start time set to 00:00 as we're measuring minutes since midnight

                $TimeDiff = New-TimeSpan -start "1 April 2012 00:00" -end $EndTime;

                # The hash key is the minute value. The hash value is the count.

                $Occurrences[($TimeDiff.Hours)*60 + $TimeDiff.Minutes]++;

            };

        };

    };

    $InputTraceFile.Close();

};

$Occurrences.GetEnumerator() | Sort-Object Key | Select-Object @{Label="Time";Expression={([System.DateTime]"1 April 2012 00:00").AddMinutes($_.Key)}},Value | ConvertTo-CSV;

As commented in the source code, the date part is irrelevant. This would be appropriate where you are processing, for example, an afternoon’s worth of trace files. The CSV output can be saved as a file, opened in Excel and charted.
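The per-minute bucketing can be tried without any trace files. In this sketch the end times are made-up sample values standing in for the EndTime column, and the start date is arbitrary, as in the script.

```powershell
# Sample end times standing in for the EndTime trace column (illustrative values)
$EndTimes = '2012-04-19T14:05:10', '2012-04-19T14:05:55', '2012-04-19T14:06:01' |
    ForEach-Object { [datetime]$_ }

# Hash to store occurrences per minute
$Occurrences = @{}

foreach ($EndTime in $EndTimes) {
    # Only the Hours and Minutes components are used, so the start date is irrelevant
    $TimeDiff = New-TimeSpan -Start ([datetime]'2012-04-01') -End $EndTime

    # Key: minutes since midnight. Value: number of matching statements in that minute.
    $Occurrences[($TimeDiff.Hours) * 60 + $TimeDiff.Minutes]++
}

$Occurrences.GetEnumerator() | Sort-Object Key
```

The first two sample times fall in the same minute (14:05, key 845) and the third falls in the next one (14:06, key 846), so the hash ends up with two keys.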