If some of your workloads are able to tolerate a certain amount of data loss and they are already bottlenecked on the write performance of the transaction log then SQL Server 2014’s delayed durability option will probably give you immediate performance boost. One use case that comes to mind is staging databases where ETL work is being performed. Some of the data feeds may be implemented as single row inserts as opposed to batch inserts. Within the application environment you may have no options available to convert to batch inserts (ie multiple inserts in one transaction). This post will show the performance improvements you could see by enabling delayed durability.

Single row inserts going into this table:

CREATE TABLE dbo.TestingData ( id INT NOT NULL IDENTITY, col1 INT NOT NULL DEFAULT 2000, col2 VARCHAR(50) NOT NULL DEFAULT 'wxyz' )

Test script inserting 10000 rows:

SET NOCOUNT ON DECLARE @i INT = 1 WHILE @i <= 10000 BEGIN INSERT INTO dbo.TestingData( col1, col2 ) VALUES (DEFAULT, DEFAULT) SET @i = @i + 1 END

Toggle the delayed durability option using this:

ALTER DATABASE [DelayedDurabilityTest] SET DELAYED_DURABILITY = <FORCED or DISABLED> WITH NO_WAIT

Results inserting 10000 rows (captured using before and after sampling of sys.dm_io_virtual_file_stats):

sampletime file_id reads readbytes bytesperread writes writebytes bytesperwrite

2014-04-21 10:22:02.967 1 0 0 0 0 0 0

2014-04-21 10:22:02.967 2 0 0 0 10000 5154816 515

2014-04-21 11:00:01.947 1 0 0 0 0 0 0

2014-04-21 11:00:01.947 2 0 0 0 1733 4046336 2334

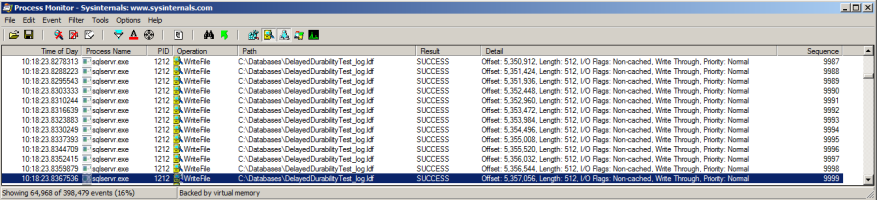

The test at 10:22 was conducted with delayed durability disabled (the default). File id 1 is the data file, file id 2 is the log file. The test at 11:00 was conducted with delayed durability set to forced. You can see the reduced number of writes to the transaction log and the higher figure for bytes per write. The sysinternals ProcessMonitor utility confirms these details. For the 10:22 test we see this:

The Sequence column starts at 0 so this is showing us 10000 writes each being 512 bytes (matches the results from sys.dm_io_virtual_file_stats). For the 11:00 test we see this:

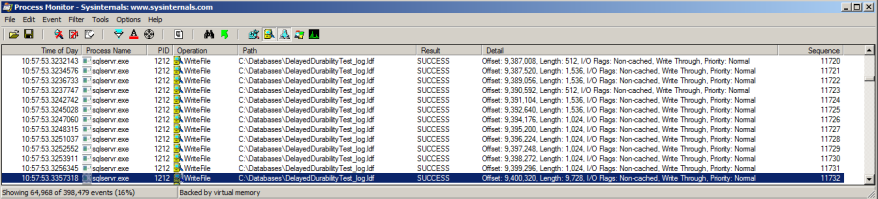

The Sequence column started at 10000, it ends at 11732 so that’s 1733 write IOs matching the figure from the DMV. The Detail column shows the larger write sizes.

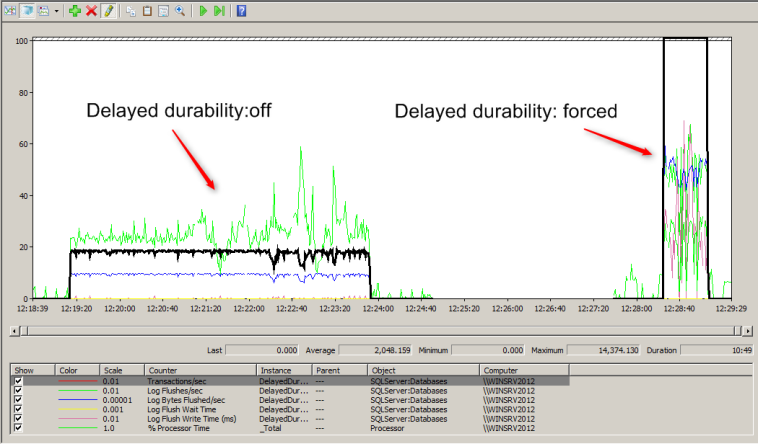

Following this simple test I changed 10000 to 500000 in the while loop that does the insert and captured the performance monitor counters for transactions/sec, log flushes and %processor time. Here are the results:

The execution took over five minutes with the option disabled and forty seconds when it was set to forced. You can see in black the improvement in transactions/sec

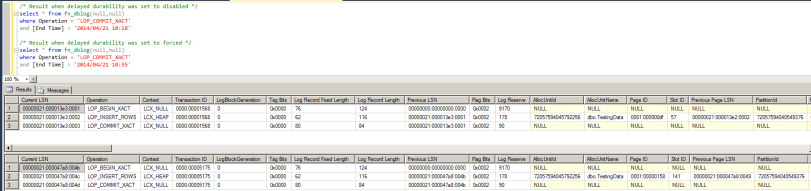

The actual transaction log content is identical for both executions (not surprising as the option simply controls when the log buffer is written to disk, not its content). Here are the results for two transaction entries using fn_dblog:

Delayed durability is available in all editions of SQL Server 2014. For workloads that can tolerate a certain amount of data loss you’ll probably notice an improvement in your transaction processing rate.